Untitled Audio Performance (2021)

Film & performance. In collaboration with Berk Özdemir.

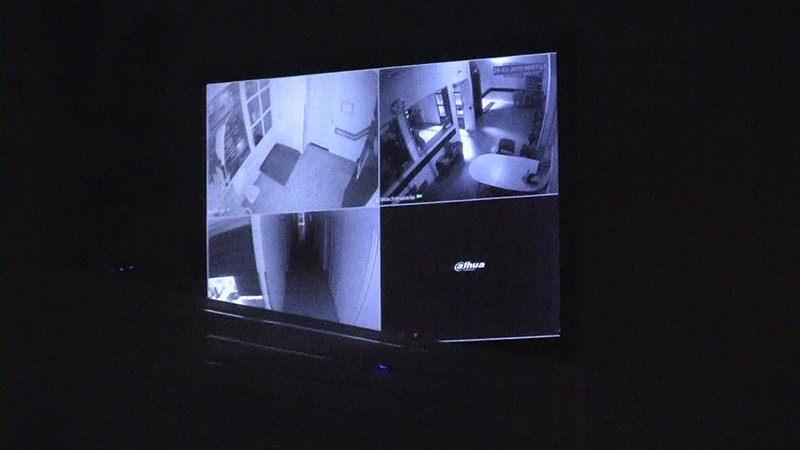

What if we use surveillance to make music?

Using neural-network object detection and classification, my good friend and fellow artist Berk Özdemir and I were tracked by cameras, and the data we generated was transformed into sound. Our movements in front of the camera directly shaped the sound production, alongside those of other, unexpected participants. The system ran on an NVIDIA Jetson Nano with the DeepStream SDK.
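The text does not describe how detections became sound, so what follows is only an illustrative sketch under stated assumptions. It assumes each detection arrives as a class label plus a bounding box in normalized 0 to 1 coordinates. The `Detection` type, `detection_to_sound`, and the specific mapping are hypothetical. They are not DeepStream's API and not the mapping used in the piece. In this version, horizontal position sets the pitch and box size, a rough stand-in for closeness to the camera, sets the loudness:

```python
from dataclasses import dataclass


@dataclass
class Detection:
    # Hypothetical detection record: bounding box in normalized [0, 1] frame coordinates
    label: str
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height


def midi_to_hz(note: float) -> float:
    # Equal-tempered tuning with MIDI note 69 (A4) = 440 Hz
    return 440.0 * 2 ** ((note - 69) / 12)


def detection_to_sound(det: Detection, low_note: int = 48, high_note: int = 84) -> dict:
    """Hypothetical mapping: x-position -> pitch, box area -> amplitude."""
    # Horizontal centre of the box, clamped to the frame
    cx = min(1.0, max(0.0, det.x + det.w / 2))
    # Left edge of the frame = low_note, right edge = high_note, snapped to a semitone
    note = round(low_note + cx * (high_note - low_note))
    # Larger boxes (people closer to the camera) play louder; capped at 1.0
    amp = min(1.0, det.w * det.h * 4)
    return {"label": det.label, "note": note, "freq_hz": midi_to_hz(note), "amp": amp}


if __name__ == "__main__":
    # One simulated frame with a performer in the centre and a smaller object at the left
    frame = [Detection("person", 0.25, 0.0, 0.5, 0.5), Detection("dog", 0.0, 0.6, 0.1, 0.2)]
    for d in frame:
        print(detection_to_sound(d))
```

In a live setup, the resulting values would be sent to a synthesizer for every frame, for example over MIDI or OSC. Any "unexpected participant" the detector picks up would then enter the sound in the same way as the performers.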

While we are constantly watched and analyzed by the machine, the resulting data becomes a tool for artistic interpretation. Through this film installation, we aim to observe the results of human-machine interaction, results which themselves become performative objects.