[Workshop]

The Unflattering Dataset of Machine Learning

Ran four times: October 2023, and January, March, and October 2024 (with Casper Schipper).

With Digital Focus module students at Design Academy Eindhoven, and Design Art Technology & BEAR students at ArtEZ Arnhem.

Course Brief

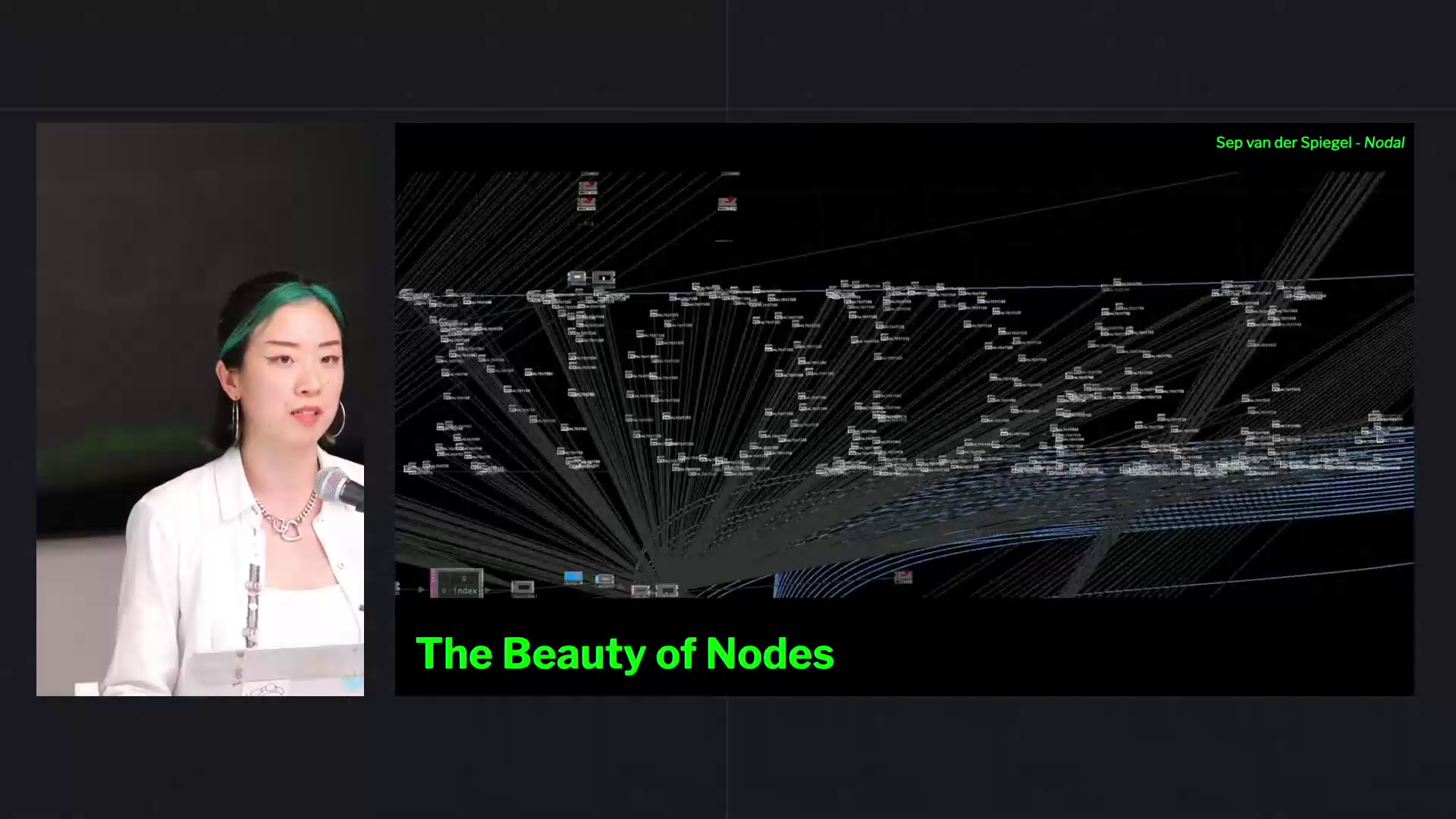

AI text-to-image models are increasingly used by the general public to create images, with the promoted outcome being highly realistic pictures and photos that imitate artists’ originals. Because labelling is object- and fact-oriented, the process of image classification and labelling is rather binary: is the dog in this picture located inside or outside? The models are also ‘positively’ reinforced by avoiding the ‘flaws’ named in so-called ‘negative prompts’, such as ‘having more than five fingers, less resolution, broken types’, and so on.

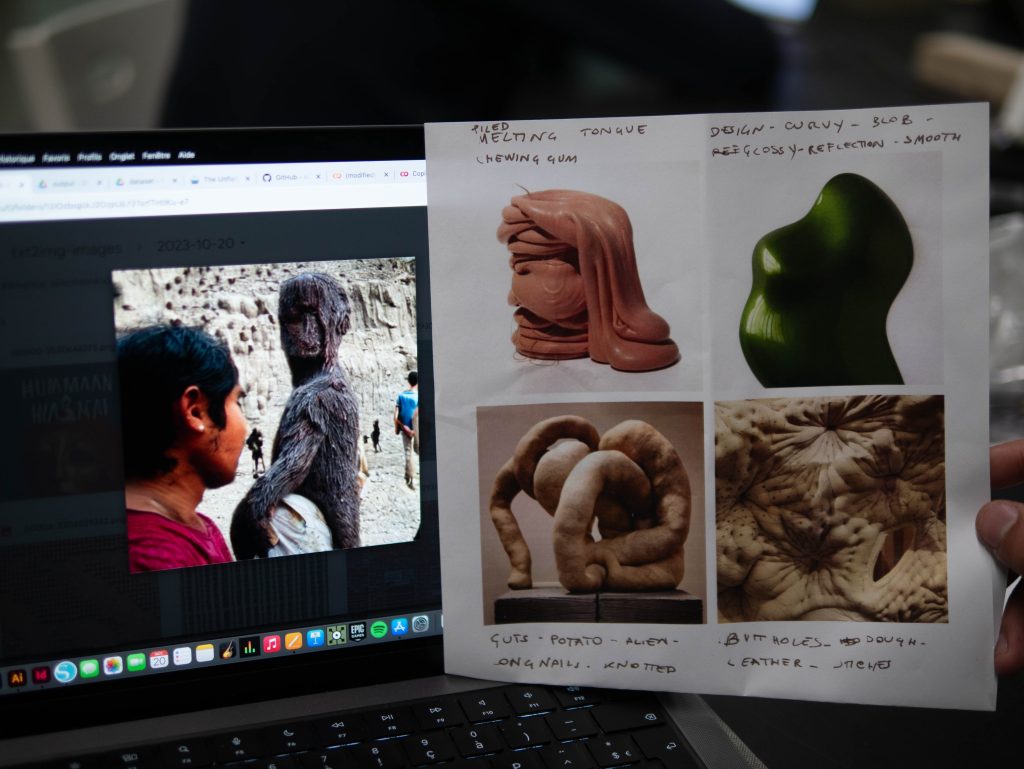

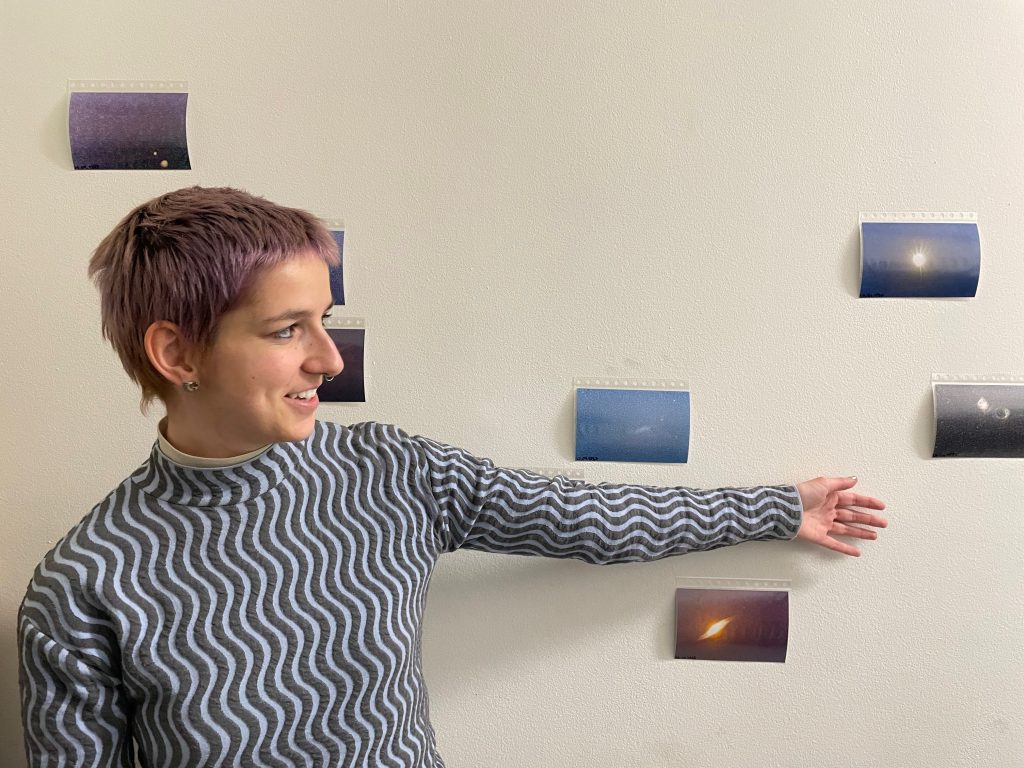

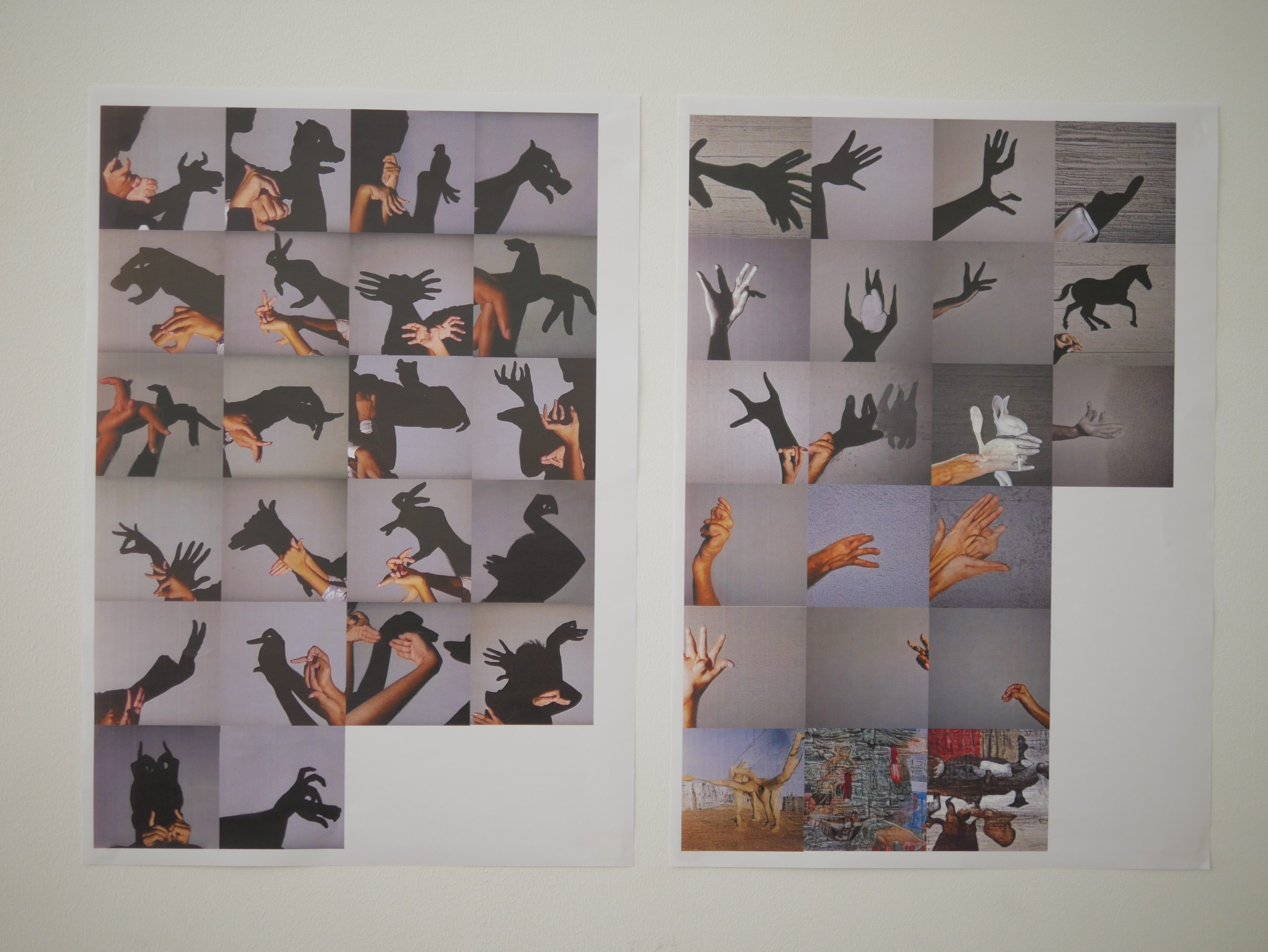

While learning the technical side of creating an in-depth dataset, the students are invited to pick an ‘unflattering’ subject for a model to be trained on, and to attempt to label the images and train with them. This means creating data that is often not considered important enough to be included in public datasets. The subjects can be ones that are not easy to describe in words, such as abstract concepts, pictures of humans with more than five fingers, forgotten old traditions and customs, or personal photos that hold nuanced memories rather than simply depicting their compositions.

Finally, this workshop is an experiment in observing and reflecting on how we, as humans, feel about carrying out this mechanical but non-binary process. What makes us think when we describe such a non-object-oriented picture in words? What forms in our minds when labelling a dataset that contains such personal memories and matters? At what point do we hesitate? What synthetic outcomes result from our ‘unflattering’ dataset, and how do we understand them?

This is a draft post! I’m still writing about it. More info & photo materials will follow.

In this 3-day workshop, the students learn, theoretically and critically, the process of making an image collection in order to build their own small, subjective ‘unflattering’ dataset for training the text-to-image generative AI model Stable Diffusion. The students experiment with tools and materialise a subversion of the notion that generative AI models represent the physical world ‘objectively’.
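
As a rough illustration of the labelling step, here is a minimal Python sketch that pairs each image in a folder with a hand-written caption and saves the result as a `metadata.jsonl` file. The `file_name` / `text` layout is one common convention for image-caption folders and is an assumption here, not necessarily what was used in the workshop. The function names, folder name and example captions are hypothetical.

```python
import json
from pathlib import Path

# File types treated as images (an assumption; adjust to your own collection).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp"}


def build_metadata(image_dir, captions):
    """Return one record per image in image_dir, paired with its caption.

    Images that have no caption yet get an empty string, so the gaps
    (the places where we hesitated) stay visible in the dataset.
    """
    records = []
    for path in sorted(Path(image_dir).iterdir()):
        if path.suffix.lower() in IMAGE_SUFFIXES:
            records.append(
                {"file_name": path.name, "text": captions.get(path.name, "")}
            )
    return records


def write_metadata(image_dir, captions):
    """Write the records as JSON Lines next to the images and return the path."""
    out_path = Path(image_dir) / "metadata.jsonl"
    with out_path.open("w", encoding="utf-8") as f:
        for record in build_metadata(image_dir, captions):
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return out_path


if __name__ == "__main__":
    # Hypothetical folder and captions, written by hand.
    example_captions = {
        "hand_01.jpg": "a hand with six fingers resting on a kitchen table",
        "grandma_garden.png": "the garden as I remember it, not as it looked",
    }
    folder = Path("unflattering_dataset")
    if folder.is_dir():
        print(write_metadata(folder, example_captions))
```

Keeping the captions in a plain dictionary keeps the focus on the wording of each label rather than on the tooling.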